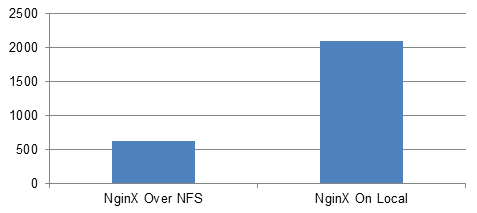

I am not a fan of NFS for production data. NFS is great for aggregating data from multiple machines, storing deployment files and other such administrative things. Serving static content? No. I haven’t blogged about it, but I have talked about it several times at conferences: as a static content distribution mechanism, NFS is horribly slow. Here’s the chart to prove it.

That’s not even counting the other issues you need to take into consideration.

I know a lot of Magento deployments use NFS so that static content is accessible from multiple servers and stays “up to date”. But, as I said, I am not a fan of NFS in production: it works, but in my opinion it is not optimal.

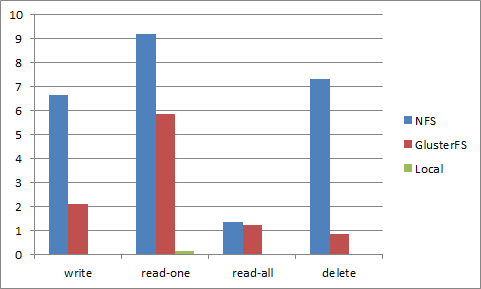

So I wanted to test GlusterFS to see if it provided a better solution, and I wrote a quick test script to try it out. I tried creating multiple files, reading one file many times, reading through the whole set of files and then deleting the files. Both the GlusterFS and NFS configurations were vanilla. Here’s the code I used to test.

```php
<?php
// Paths under test: an NFS mount, a GlusterFS mount and a local directory.
$fs = array('/kschroeder/gfstest', '/mnt/gfstest', '/var/tmp/gfstest');

foreach ($fs as $f) {
    @mkdir($f, 0755, true);

    // Write 500 files. str_pad('', 0, 10240) would return an empty string,
    // so pad to 10,240 bytes to get roughly 10 KB per file.
    $startTime = microtime(true);
    for ($i = 0; $i < 500; $i++) {
        file_put_contents($f . '/test-' . $i, str_pad('', 10240, '0'));
    }
    $elapsed = microtime(true) - $startTime;
    echo "$f write $elapsed\n";

    // Read the same (first) file 10,000 times.
    $startTime = microtime(true);
    $files = glob("$f/*");
    $file = array_shift($files);
    for ($i = 0; $i < 10000; $i++) {
        file_get_contents($file);
    }
    $elapsed = microtime(true) - $startTime;
    echo "$f read-one $elapsed\n";

    // Read every file once.
    $startTime = microtime(true);
    foreach (glob("$f/*") as $file) {
        file_get_contents($file);
    }
    $elapsed = microtime(true) - $startTime;
    echo "$f read-all $elapsed\n";

    // Delete every file.
    $startTime = microtime(true);
    foreach (glob("$f/*") as $file) {
        unlink($file);
    }
    $elapsed = microtime(true) - $startTime;
    echo "$f delete $elapsed\n";
}
```

/kschroeder/gfstest was an NFS mount, /mnt/gfstest was a GlusterFS mount and /var/tmp/gfstest was local.

Here are the results.

This is the elapsed time for each individual test, so lower is better. GlusterFS beat NFS on every operation. Compared with local disk, however, there was no contest: local was far faster than either.

GlusterFS is definitely better than NFS, IMHO. It provides clustering, striping and replication out of the box, and all of those options are easy to configure without having to rely on magic or extra components to make them work. For that reason I would definitely put it on my list of preferred solutions for handling static web content.

But what I want is a distributed, replicated static file storage mechanism that does client-side caching, so that my static file read performance is close to that of local disk. If my main file server goes down, I don’t want my entire web site to go down with it. And it needs to be easy to install and manage, so that merchants, who often do not have a lot of system administration experience, are able to handle basic sysadmin tasks. As much of an improvement as GlusterFS is, out of the box it doesn’t seem to solve the problem fully.

The search continues (and I do have some other possibilities I want to test).

Comments

Obdurodon

Hi, GlusterFS developer here. Glad you’re enjoying the project. Unfortunately, client-side caching is a bit of a hard problem in a fully distributed system. Part of the problem is even figuring out what people want. If people want fully consistent caching, then you need some way to deal with the case where one client is trying to write something that’s in another client’s cache. At this point it’s fairly well understood how to do that when there’s a single server (like most NFS implementations) but it becomes *much* harder in a system like ours with many servers.

Something that’s a lot easier to do is non-consistent caching. Clients cache what they want, and if somebody else changed the original while it was cached then too bad – that’s the application’s problem. This type of caching, with time-based cache expiration, works pretty well for workloads where data once written is rarely if ever written again but might be read a lot. Maybe some day I’ll get a chance to work on that within GlusterFS itself. Meanwhile, something you might try is FS-Cache with the NFS client and our built-in NFS server. That way you still get a scalable back end, replication, etc., but you also get client-side caching. No, I haven’t tried it myself, so caveat emptor. 😉

If there’s any other way I or others on the project can help, just let us know. Thanks for the kind words, and good luck with your deployment.
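For illustration only, here is a minimal PHP sketch (PHP to match the test script; PHP 8.0+ assumed) of the non-consistent, time-based caching described in the comment above. It is an application-level cache inside a single PHP process, not a GlusterFS or FS-Cache feature, and the class name `TtlFileCache` and the 60-second TTL are hypothetical.

```php
<?php
declare(strict_types=1);

// Hypothetical read-through cache with time-based expiry (not a GlusterFS,
// FS-Cache or Magento API). Entries live in this process's memory and can be
// stale for up to $ttl seconds after another client changes the file.
final class TtlFileCache
{
    /** @var array<string, array{data: string, expires: float}> */
    private array $entries = [];

    public function __construct(private float $ttl = 60.0)
    {
    }

    public function get(string $path): string
    {
        $now = microtime(true);
        $entry = $this->entries[$path] ?? null;
        if ($entry !== null && $entry['expires'] > $now) {
            // Hit: no file system access at all, even if the file has changed.
            return $entry['data'];
        }
        // Miss or expired: read through to the (possibly remote) mount.
        $data = file_get_contents($path);
        if ($data === false) {
            throw new RuntimeException("Cannot read $path");
        }
        $this->entries[$path] = ['data' => $data, 'expires' => $now + $this->ttl];
        return $data;
    }
}

// Demo with a local temp file standing in for a file on a shared mount.
$path = tempnam(sys_get_temp_dir(), 'ttl');
file_put_contents($path, 'v1');
$cache = new TtlFileCache(60.0);
echo $cache->get($path), "\n"; // first read goes to the file
file_put_contents($path, 'v2'); // another writer updates the file
echo $cache->get($path), "\n"; // still the cached "v1" until the TTL expires
unlink($path);
```

The trade-off is the one described above: a cache hit never touches the network, but a reader can see stale content for up to one TTL after another client writes the file.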

kschroeder

Obdurodon Thanks for the info. FS-Cache is one of the things I want to take a look at. For the type of workloads I see, I don’t need consistency so much as I need cache invalidation. Many Magento users use NFS to store user files such as product images. If someone saves one of those images, it is not the end of the world if there are a few milliseconds of delay while the cache is invalidated on multiple machines. There are multiple solutions that can handle that, but they add infrastructure complexity that many Magento merchants shouldn’t take on. So, for the scenarios I tend to work in, I would prefer something that caches files across multiple machines out of the box.

That said, I really do like what has been done with GlusterFS. While it doesn’t solve the problem I’m trying to solve, it’s got some really neat things in it.
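One way to approximate the invalidation behaviour described above, without a full consistency protocol, is to revalidate each cached entry against the file’s modification time. This is a swapped-in technique, not something GlusterFS, FS-Cache or Magento provide; `MtimeFileCache` is a hypothetical name and PHP 8.0+ is assumed. Each read still costs one `stat()` on the mount, but an unchanged file’s contents are not read again.

```php
<?php
declare(strict_types=1);

// Hypothetical cache that revalidates entries against filemtime().
// A changed mtime invalidates the entry; an unchanged one is served from memory.
final class MtimeFileCache
{
    /** @var array<string, array{data: string, mtime: int}> */
    private array $entries = [];

    public function get(string $path): string
    {
        // PHP caches stat() results; clear them so a changed mtime is seen.
        clearstatcache(true, $path);
        $mtime = filemtime($path);
        if ($mtime === false) {
            throw new RuntimeException("Cannot stat $path");
        }
        $entry = $this->entries[$path] ?? null;
        if ($entry !== null && $entry['mtime'] === $mtime) {
            return $entry['data']; // unchanged since it was cached
        }
        // New or modified file: read it and remember the mtime we saw.
        $data = file_get_contents($path);
        if ($data === false) {
            throw new RuntimeException("Cannot read $path");
        }
        $this->entries[$path] = ['data' => $data, 'mtime' => $mtime];
        return $data;
    }
}

// Demo with a local temp file standing in for a product image on a shared mount.
$path = tempnam(sys_get_temp_dir(), 'mt');
file_put_contents($path, 'v1');
touch($path, time() - 60);      // give the first version an older mtime
$cache = new MtimeFileCache();
echo $cache->get($path), "\n";  // read from the file
file_put_contents($path, 'v2'); // rewriting the file updates its mtime
echo $cache->get($path), "\n";  // mtime changed, so "v2" is read again
unlink($path);
```

`filemtime()` returns whole seconds, so a rewrite within the same second as the cached version can go unnoticed.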

kschroeder

Obdurodon Oh, and I should also mention that the reason I ran this test was that I came across a customer who was using GlusterFS instead of NFS, and I wanted some data on how the two compared. So I wasn’t just pulling it at random out of a stack of possible solutions.