… was what I was going to write about. I’ve been meaning to come up with a solution that combines regular HTTP requests, WebSockets, and Redis to send messages easily back and forth between the backend and the frontend. My thought was that I might be able to take Nginx, which has WebSocket proxy support, and combine it with Redis to facilitate communication between a user in a browser and any asynchronous backend tasks that might have pertinent messages to send to the frontend.

There are definitely ways of doing it. But, basically, all of them involve having a web frontend, Redis, and then a bunch of Node code that you have to write and maintain. That Node requirement is what has held my research on this back for several months. I just don’t want to have yet another service running that requires yet more code for me to write, in yet another programming language.

Fast forward to this week. I started working on a load testing mechanism that allows Magium tests to be used as a means of load testing. Obviously you cannot generate significant loads from a browser test on one server and so the solution REQUIRED some form of messaging and synchronization. In my searching I found that ActiveMQ could be used as an embedded messaging service. Well, I know Java, and I know that ActiveMQ has WebSockets support (so I could control the tests from a browser), so “why not?” I figured.

It turns out that it actually all worked really, really well.

So it turns out that Nginx + this + that + the kitchen sink wasn’t actually necessary. Everything could be handled within two distributables: Jetty (an Eclipse project) and Apache ActiveMQ (with Camel, which acts as a bridge between the two). With that I get WebSockets, HTTP/1 & 2 (HTTP/2 server push, maybe?), and an integrated async messaging system (I LOVE async!). Add an ESB like MuleSoft’s Mule and you’re set for almost any kind of communication.

But I’m getting ahead of myself.

While I intend to answer deeper questions of how Jetty fits into a larger pattern of advanced web applications, including running PHP, I don’t have the time to do that today. I owe some folks some deliverables and so I will continue my investigation into using Jetty in another post.

What I want to discuss at this point is static throughput. Java has the reputation of being slow and, in particular, bloated. I believe that this reputation is somewhat deserved. But, as with the claim that the filesystem is slow, the bigger picture is sometimes a little more complicated. Still, if Jetty turned out to be “enterprisey” (which means big, bloated and slow) then this wouldn’t work as a solution.

So like a good little boy, I tested to see if Jetty would be a blocker on the performance/bloat front.

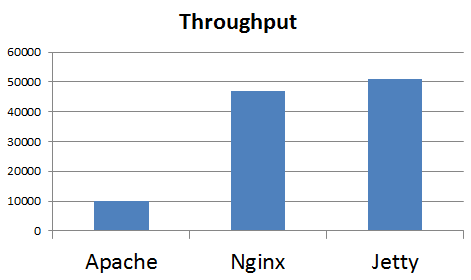

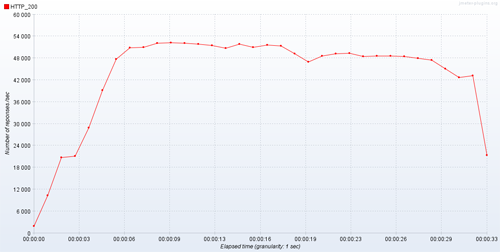

The first graph shows the test results. The test was retrieving the favicon.ico file on a 4x Intel(R) Xeon(R) CPU X3430 @ 2.40GHz with 16GB RAM. All webservers were configured to run on their defaults. That means that these numbers could probably be improved with tuning. In fact, Nginx could possibly double its throughput (but, then again, so could Jetty, for reasons we’ll see in a bit). Apache is pretty much at its limit.
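If you want to try a similar measurement yourself, the idea can be sketched in a few lines of Java. To be clear, this is not the harness that produced the graphs above; it is a minimal sketch using only the JDK’s built-in `java.net.http.HttpClient` (Java 11+). The class name `StaticThroughputProbe`, the thread count, the duration, and the default URL are all assumptions made up for illustration. A single client like this, especially one running on the same box as the server, will not get anywhere near the numbers in this post.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical helper: hammers one static URL and reports successful requests per second. */
public class StaticThroughputProbe {

    /**
     * Sends GET requests to {@code url} from {@code threads} workers for roughly
     * {@code seconds} seconds and returns the rate of HTTP 200 responses per second.
     */
    public static double measure(String url, int threads, int seconds) throws InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        AtomicLong ok = new AtomicLong();

        long start = System.nanoTime();
        long deadline = start + TimeUnit.SECONDS.toNanos(seconds);
        ExecutorService pool = Executors.newFixedThreadPool(threads);

        for (int i = 0; i < threads; i++) {
            pool.submit(() -> {
                while (System.nanoTime() < deadline) {
                    try {
                        // The body is discarded; only the status code matters here.
                        HttpResponse<Void> response =
                                client.send(request, HttpResponse.BodyHandlers.discarding());
                        if (response.statusCode() == 200) {
                            ok.incrementAndGet();
                        }
                    } catch (IOException e) {
                        // A failed request is simply not counted.
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                }
            });
        }

        pool.shutdown();
        pool.awaitTermination(seconds + 30L, TimeUnit.SECONDS);
        double elapsedSeconds = (System.nanoTime() - start) / 1e9;
        return ok.get() / elapsedSeconds;
    }

    public static void main(String[] args) throws InterruptedException {
        // ASSUMPTION: the URL, 8 workers, and 10 seconds are placeholders, not values from the test.
        String url = args.length > 0 ? args[0] : "http://localhost:8080/favicon.ico";
        System.out.printf("%.0f requests/second%n", measure(url, 8, 10));
    }
}
```

Run it against each server in turn with the same URL path and compare the printed rates. For numbers you would actually publish, a dedicated load generator on separate hardware is the safer choice.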

What? Jetty was faster than Nginx? Yep. In this test it was. I was working under the assumption that, given the ratio of static content to PHP requests in a Magento site, I would consider Jetty a contender if it was simply better than Apache. I was most definitely NOT expecting it to be faster than Nginx.

So let’s get down into some of the details.

Let’s dispense with Apache first, since we all know why it performed like this (though, 10k requests per second is definitely not bad).

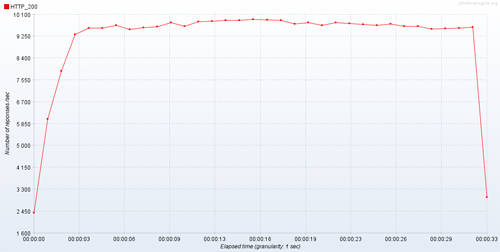

Apache hit the throughput limit pretty quickly and never got above 10k requests per second.

System resource usage pretty much maps to throughput. The throughput was limited by CPU.

No surprises there.

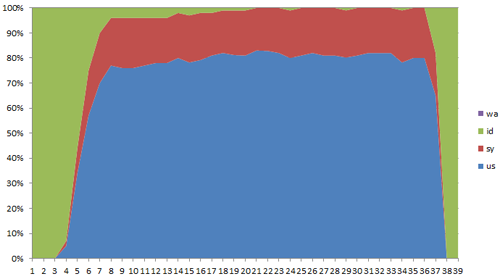

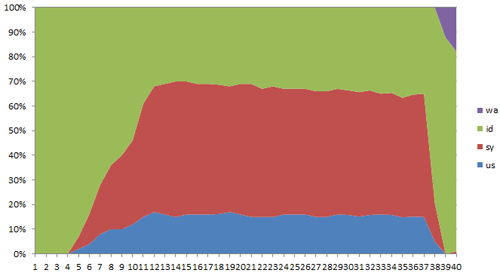

Next up is Nginx. Nginx was configured with worker_processes set to auto, which resolved to 4 worker processes. The result of the throughput test is this:

Throughput went up very quickly, and Nginx was serving upwards of 47,000 requests per second.

This is the system usage during the Nginx test. Clearly system time was the driver for throughput; the two match almost exactly. I do wonder, though, whether the downward slope of the throughput is a function of network saturation. If the throughput decline were due to increased system time, then system time should increase while throughput decreases. Instead we see them move in lockstep. For that reason I believe that the overhead of the additional HTTP payload (headers and such) filling up the network would explain the decline. In other words, Nginx could probably do better.
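The network saturation hunch is easy to sanity-check with some arithmetic. The sketch below is only a back-of-the-envelope calculation: the 1 Gbit/s link speed and the 1,500-byte response size are assumptions (neither the link speed nor the size of the favicon is given here), the class name `LinkCeiling` is made up, and TCP/IP framing overhead is ignored.

```java
/** Hypothetical helper for back-of-the-envelope link saturation arithmetic. */
public class LinkCeiling {

    /** Upper bound on responses per second that a link can carry (framing overhead ignored). */
    public static double maxResponsesPerSecond(double linkBitsPerSecond, int bytesPerResponse) {
        return linkBitsPerSecond / (8.0 * bytesPerResponse);
    }

    /** Response size, in bytes (headers plus body), at which the link fills up at a given request rate. */
    public static double saturatingResponseBytes(double linkBitsPerSecond, double requestsPerSecond) {
        return linkBitsPerSecond / (8.0 * requestsPerSecond);
    }

    public static void main(String[] args) {
        // ASSUMPTION: a 1 Gbit/s link; the real link speed is not stated.
        double gigabit = 1_000_000_000.0;

        // 47,000 req/s is the Nginx peak reported in this test.
        System.out.printf("Response size that fills the link at 47k req/s: %.0f bytes%n",
                saturatingResponseBytes(gigabit, 47_000));

        // ASSUMPTION: 1,500 bytes per response is an illustrative size, not a measured one.
        System.out.printf("Ceiling for a 1,500-byte response: %.0f req/s%n",
                maxResponsesPerSecond(gigabit, 1_500));
    }
}
```

Under the gigabit assumption, a response of roughly 2.6 KB (headers included) would be enough to fill the pipe at 47,000 requests per second, so the hypothesis is at least plausible. Checking it properly would mean measuring the actual response size on the wire and the actual link speed.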

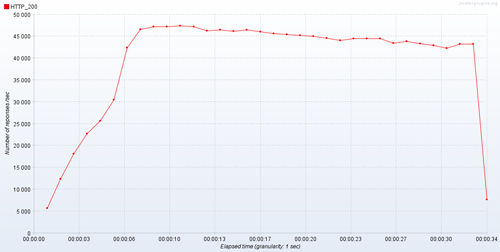

But that’s not really the point of all this. The point of all this, in my mind, was that Jetty was able to keep up with (and surpass) Nginx in the static throughput department.

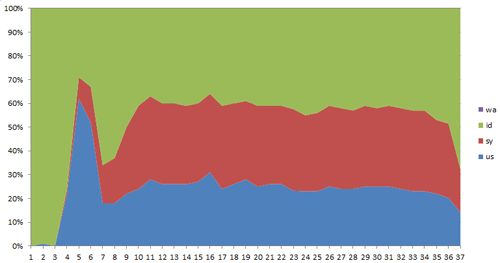

The peak throughput was around 53,000 requests per second, compared to Nginx’s 47k.

System usage was similar to Nginx, perhaps even a little better. Nginx hit 70% and stayed there whereas Jetty peaked at 70%, dropped to 37% and then hit 60%.

In short, I was dumbfounded when my first test graphs were being rendered. I figured that there was an error; it was not what I was expecting at all.

Does this mean that you should switch to Jetty?

No.

Jetty has a lot of complexity and most Magento (my focus) system admins do not have the experience to administer Jetty. If you have standard needs, Nginx + PHP-FPM is most likely the best option still.

However, do you have an application that needs WebSockets or some form of messaging (such as JMS)? These test results make for some interesting thoughts.

That highlights one of the things I like about Java. I like the language syntax, but what I like about Java more is that a lot of the stuff that “feels” cobbled together in the PHP world already exists in the Java world. Instead of writing yet another abstraction layer in PHP that does about 20% of what you need, perhaps the Java infrastructure running on Jetty gets you 80% of the way there instead of 20%.

But, like I said, I have deliverables I need to deliver and so I need to wrap up this blog post. There are three questions I still want to answer.

- How does Jetty work with PHP-FPM? I expect this to be a slam dunk since PHP-FPM works fine with Nginx.

- How can a PHP developer put this all together and give themselves more features than they could ever know what to do with?

- I still need to directly answer the question of easy integration of WebSockets and messaging. I expect that either Jetty + ActiveMQ or Jetty + Redis will provide an out-of-the-box(ish) solution.
